The F# PowerPack comes with the FsLex and FsYacc tools that make it quite easy to create a parser for domain-specific languages, or just to parse the contents of complex text files. A previous post had an example that took the contents of a spread sheet cell and converted it into an abstract syntax tree (AST) and also some code to walk that AST to produce a string representation that closely resembled Lisp syntax.

The parser from the example creates a syntax tree whose nodes are expressed as F# Discriminated Unions. Processing such a tree in F# is very easy and straightforward, because an AST that is built this way lends itself to be walked using recursion and pattern matching, which are obvious strengths of the programming language.

Things get a wee bit uglier when you just use F# for the lexing and parsing bit, but your main program is written in another .NET language (C# comes to mind). Discriminated unions are a speciality of F# and have no equivalent in C#, meaning that you need some strategy to deal with this situation, which is probably not a very rare one.

Discriminated Union Internals

The definition of a discriminated union on the Microsoft web site looks like this:

type type-name = | case-identifier1 [of type1 [ * type2 ...] | case-identifier2 [of type3 [ * type4 ...] ...

One would suspect that there must be some way to represent such a data structure in the CLR, and a bit of digging in an F# assembly shows that the underlying implementation is realised through a class hierarchy and inheritance, which after all is the CLR’s programming paradigm and is comprehensible to all languages that run on the platform.

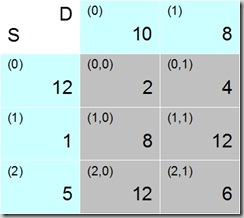

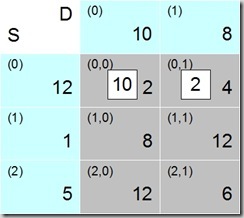

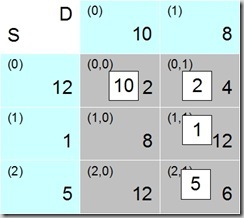

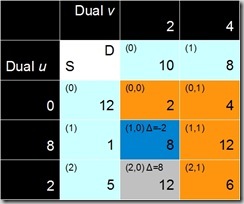

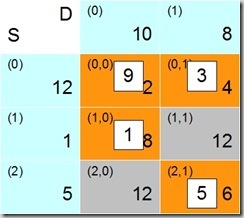

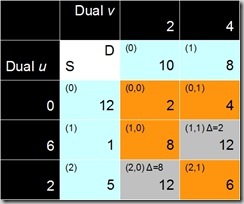

Fig. 1

The type name as defined in the F# program, plus all the case identifiers are represented by classes. Each case identifier gets its own class that derives from a common base class, which itself corresponds to the F# discriminated union type. To keep things neatly together, all the derived classes (the case identifiers) are nested within the base class.

On the F# side, each of the case identifiers can have a type that determines what sort of data it can hold. Sometimes, a case identifier does not have a data type and only serves as a ”typed marker”, as is the case with the None case of an option type.

From the point of view of the CLR, the data associated with a case identifier become Item properties of the corresponding class. The type of the Item property is the one defined in the DU, and if the type happens to be a tuple, then each of the tuple elements becomes a separate property Item1…Itemn.

Consider the following example definition and values for a DU type:

module public Module1

type SomeUnion =

| AWholeNumber of int

| AString of string

| AStringTuple of string * string

| AStringList of string list

| AStringArray of string array

let aWholeNumber = AWholeNumber(12)

let aString = AString("twelve")

let aStringTuple = AStringTuple("one", "two")

let aStringList = AStringList(["one";"two"])

let aStringArray = AStringArray([|"one";"two"|])

All the value bindings in this code are of type SomeUnion to the CLR (and to a C# program that wants to access them). SomeUnion corresponds to <type-name> in the class diagram above. The actual type of each value, however, is given by the constructor that was used in the F# code, i.e. the actual types are AWholeNumber, AString, AStringTuple, AStringList, or AStringArray, each of which derive from SomeUnion.

A C# program that wants to access them first needs to downcast from the type SomeUnion to the correct runtime type and can then use the Item properties to get the data:

int aWholeNumber = ((Module1.SomeUnion.AWholeNumber)Module1.aWholeNumber).Item;

string aString = ((Module1.SomeUnion.AString)Module1.aString).Item;

Tuple<string, string> aStringTuple = Tuple.Create(

((Module1.SomeUnion.AStringTuple)Module1.aStringTuple).Item1,

((Module1.SomeUnion.AStringTuple)Module1.aStringTuple).Item2);

IEnumerable<string> aStringList =

((Module1.SomeUnion.AStringList)Module1.aStringList).Item;

string[] aStringArray =

((Module1.SomeUnion.AStringArray)Module1.aStringArray).Item;

Two observations here: You can’t really use F# lists in C# code, but they can always be treated as type IEnumerable<>, which they are. In order to make this work, however, you need to add a reference to the FSharp.Core assembly to the C# project. Secondly, the way to make a C# tuple out of the F# tuple is supremely clumsy; structurally, they are the same, so there should be a more elegant way to assign one to the other[1]. Also, in this example and in those that follow, variables are declared with their full types. In practice, one would use type inference and just declare them as var, but in the examples it is easier to see what is happening when full type names are given.

In the example above, the runtime types of all the values are known in advance, so the casting doesn’t pose any problems. When dealing with real-world F# discriminated unions, one needs to take into account that the actual type first needs to be determined in order for the casts to be successful. Three mechanisms are available:

Module1.SomeUnion.AWholeNumber aNumber = Module1.aValue as Module1.SomeUnion.AWholeNumber;

if (aNumber != null)

{

// now we know we can cast

}

We can use the C# as keyword to try and cast; if the cast to this particular class is not successful, we try the next etc.

if (Module1.aValue.IsAWholeNumber)

{

// now we know we can cast

}

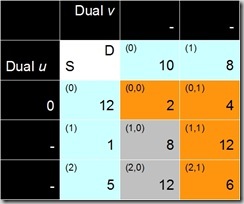

The base class also has an Is<case-identifier> property for every derived class, in our case there is an IsAWholeNumber, IsAString, IsAStringTuple, IsAStringList, and an IsAStringArray property, so every single possibility can be checked. Internally, this uses the previous technique to try and cast to a particular type, and returns false if that cast fails.

switch (Module1.aValue.Tag)

{

case Module1.SomeUnion.Tags.AWholeNumber:

// cast here

break;

case Module1.SomeUnion.Tags.AString:

// or cast here

break;

// etc.

}

Last but not least, a big switch statement on the Tag property of the base class SomeUnion will do. The value of this property is an integer that specifies the actual class being used; there is a corresponding nested struct Tags that contains one int const value with the name of each of the subclasses/case identifiers and can be used for the branches in the switch. After figuring out what the actual class is, cast the value into it, and then access the Item property, or the Itemn properties as appropriate.

Walking the AST in C#

Looking back at the original post on FsYacc, the parser created an AST with the following (recursive) structure:type Cell =

| FormulaCell of Expression

| ConstantCell of Value

and Expression =

| CellRef of Reference

| Function of String * Expression

| Constant of Value

| InfixOperation of BinaryOperator

and BinaryOperator =

| Plus of Expression * Expression

| Minus of Expression * Expression

| Times of Expression * Expression

| Divide of Expression * Expression

and Value =

| Float of Double

| Integer of Int32

and Reference =

| SingleCellRef of String

| RangeCellRef of String * String

Armed with the knowledge on how to access discriminated unions and their contents, the F# walker function that converts the AST into a string representation now practically rewrites itself in C#.

public static string format(Ast.Cell cell)

{

switch (cell.Tag)

{

case Ast.Cell.Tags.FormulaCell:

return format(((Ast.Cell.FormulaCell)cell).Item);

case Ast.Cell.Tags.ConstantCell:

return format(((Ast.Cell.ConstantCell)cell).Item);

}

return "<unknown cell type>";

}

public static string format(Ast.Expression expression)

{

switch (expression.Tag)

{

case Ast.Expression.Tags.CellRef:

return format(((Ast.Expression.CellRef)expression).Item);

case Ast.Expression.Tags.Function:

{

var fn = (Ast.Expression.Function)expression;

return "(" + fn.Item1 + "," + format(fn.Item2) + ")";

}

case Ast.Expression.Tags.Constant:

return format(((Ast.Expression.Constant)expression).Item);

case Ast.Expression.Tags.InfixOperation:

return format(((Ast.Expression.InfixOperation)expression).Item);

case Ast.Expression.Tags.ListExpression:

{

var sb = new System.Text.StringBuilder();

foreach (var elem in ((Ast.Expression.ListExpression)expression).Item)

{

if (sb.Length > 0)

sb.Append(',');

sb.Append(format(elem));

}

return sb.ToString();

}

}

return "<unknown expression type>";

}

public static string format(Ast.Value value)

{

switch (value.Tag)

{

case Ast.Value.Tags.Float:

return ((Ast.Value.Float)value).Item.ToString();

case Ast.Value.Tags.Integer:

return ((Ast.Value.Integer)value).Item.ToString();

}

return "<unknown value type>";

}

public static string format(Ast.Reference reference)

{

switch(reference.Tag)

{

case Ast.Reference.Tags.SingleCellRef:

return ((Ast.Reference.SingleCellRef)reference).Item;

case Ast.Reference.Tags.RangeCellRef:

{

var range = (Ast.Reference.RangeCellRef)reference;

return "(" + range.Item1 + "," + range.Item2 + ")";

}

}

return "<unknown reference type>";

}

public static string format(Ast.BinaryOperator op)

{

switch (op.Tag)

{

case Ast.BinaryOperator.Tags.Plus:

{

var pair = (Ast.BinaryOperator.Plus)op;

return "(+," + format(pair.Item1) + "," + format(pair.Item2) + ")";

}

case Ast.BinaryOperator.Tags.Minus:

{

var pair = (Ast.BinaryOperator.Minus)op;

return "(-," + format(pair.Item1) + "," + format(pair.Item2) + ")";

}

case Ast.BinaryOperator.Tags.Times:

{

var pair = (Ast.BinaryOperator.Times)op;

return "(*," + format(pair.Item1) + "," + format(pair.Item2) + ")";

}

case Ast.BinaryOperator.Tags.Divide:

{

var pair = (Ast.BinaryOperator.Divide)op;

return "(/," + format(pair.Item1) + "," + format(pair.Item2) + ")";

}

}

return "<unknown binary operator>";

}

The next installment will explore other ways to use FsYacc from a C# project, without having to look into the internals of F# datatypes.

[1] Clarification on Tuples

The remark about not being able to assign the F# tuples to C# tuples only holds in the context of discriminated unions. As for “normal” tuples, they are perfectly interoperable and the same CLR data type, so if you have a value in your F# code

module public Module1

let aTuple = ("abc", "def")

This can be used without problems in C# as

Tuple<string, string> tuple = Module1.aTuple;

Given this fact, it is an even greater pity that it doesn’t work with DUs. If the “tuple-type” cases were not implemented with a number of different Itemn properties, but with a single Item property of type Tuple<>, this could have been so much more elegant. The current implementation is probably due to the fact that the generic Tuple<> class is relatively new to C# and the CLR (this came with .NET 4), and F# tuples predate it by a few years, so interoperability wasn’t an issue at the time.

Fig. 1

Fig. 1

Fig. 14

Fig. 14